Others dabble. We deliver.

Senior engineers driving accelerated delivery — empowered by contextual AI.

We don’t replace engineers with AI. We turn senior engineers into force multipliers — delivering 3–5× higher throughput.

Senior teams + contextual intelligence = more roadmap delivered, fewer defects, faster business alignment.

Delivery built around senior expertise, not just AI-assisted coding

According to DORA, more than 90% of CIOs and IT leaders have already adopted generative AI, often through AI-assisted tools like Copilot or Cursor.

Yet very few see sustained improvements in:

- Time-to-value

- Roadmap capacity

- Delivery reliability

The reason isn’t the tools.

It’s the delivery model.

Most organizations still rely on sequential delivery models — discover, build, test, deploy — where progress depends on handoffs and throughput scales only by adding people.

AI can accelerate tasks. But tools alone don’t improve delivery outcomes. The result:

- Faster typing, not faster outcomes

- More rework and defects, not higher quality

- Senior engineers becoming bottlenecks instead of multipliers

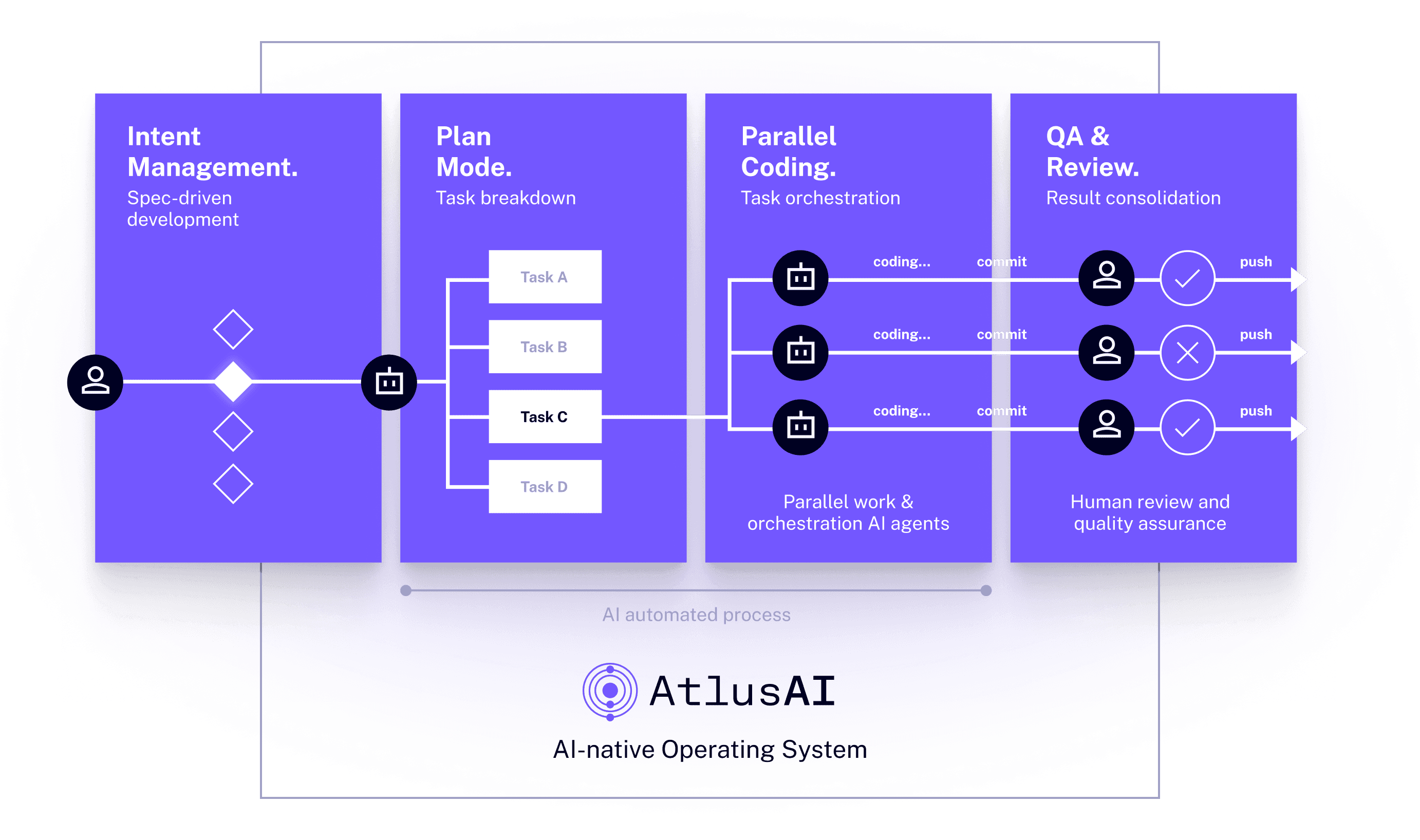

AI-native delivery isn’t about automation replacing people. It’s about parallelizing work under expert guidance.

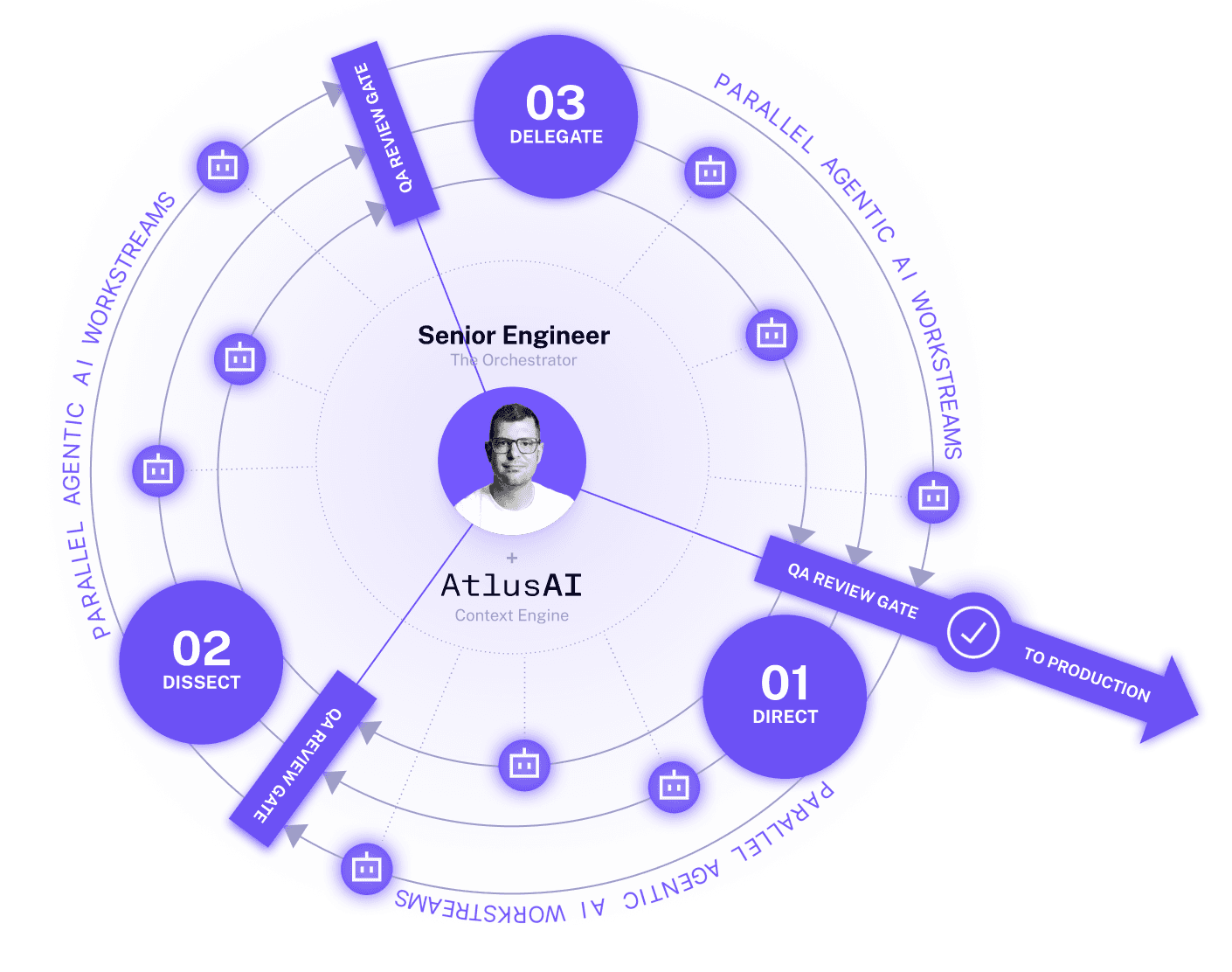

Our delivery model:

- Decomposes work into independent threads

- Advances them simultaneously

- Keeps every contributor — human and AI — aligned through shared context and clear guardrails

This is how senior engineers become multipliers instead of bottlenecks. Until the delivery model changes, AI will not deliver enterprise-level ROI.

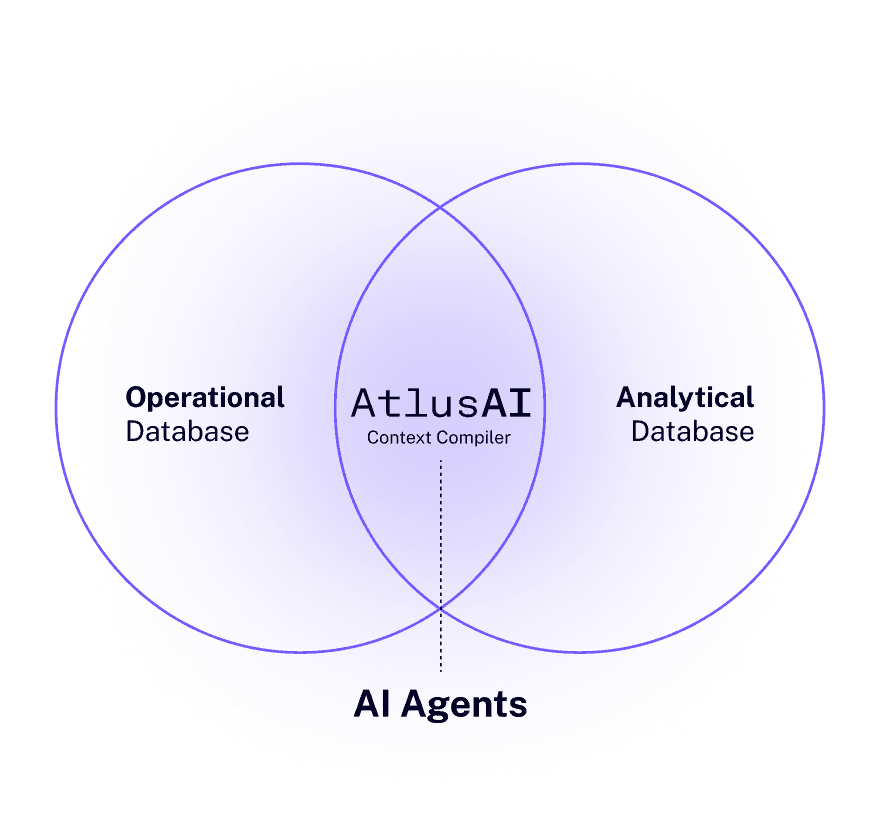

AtlusAI: Contextual intelligence that accelerates expert delivery

Parallel delivery at enterprise scale requires more than off-the-shelf tools.

It requires a system that captures decisions, intent, and execution state, so senior engineers (and the AI supporting them) can operate safely in parallel.

Our delivery framework combines seasoned engineering judgment with purpose-built intelligence to reduce coordination drag and unlock parallel execution.

Technology accelerates — it does not replace — expert decision-making.

How contextual intelligence supports agentic, parallel delivery

Shared delivery context

Unifies business goals, architectural decisions, and live execution state so parallel work stays aligned and production-ready.

AI agents under senior oversight

Enterprise-grade agents execute repeatable tasks in parallel, guided by senior engineers and governed by shared guardrails.

Decision capture and workflow automation

Key decisions, code changes, and outcomes are continuously documented — reducing knowledge loss and coordination overhead.

Built-in governance frameworks

Quality, security, and compliance controls are embedded directly into execution, so speed never compromises trust.

AtlusAI gives senior engineers and their AI agents the clarity required to build safely, quickly, and in parallel.

Proven impact in production

AtlusAI gives senior engineers clarity, continuity, and leverage so teams can move faster with confidence.

In production environments, teams using our delivery model experience:

- 85% faster onboarding: engineers ramp up in hours instead of days

- 80–90% reduction in QA effort through automated workflows

- 75% less time spent preparing meetings and demos

- Decision traceability across code, tickets, and conversations in seconds instead of hours

Why contextual AI is the difference

Parallel execution only works when every contributor operates with shared, continuously updated context.

Without it:

- Work drifts

- Quality degrades

- Risk compounds

Context keeps parallel delivery safe, scalable, and reliable.

By embedding business intent, architectural decisions, and execution state into a unified context layer, every thread remains aligned — and senior engineers stay in control.

This is what turns AI from productivity theater into real delivery leverage.

Why AI ROI stalls

Faster typing does not equal faster delivery. Parallel, senior-led execution does.

Limited experience in AI delegation

Engineers treat AI like a chatbot: a one-way conversation. Without senior oversight, this introduces system risk.

Lack of context hinders quality

Weak interfaces and documentation lead to agent drift. Unchecked code and high defect rates limit AI’s impact in production.

Faster typing ≠ faster delivery

Teams still operate sequentially, not in parallel. Broken flow and context switching erase the gains.

Real outcomes. Real environments.

When delivery becomes parallel and senior engineers are given leverage, the results are visible, defensible, and board-level.

Get started

Parallel coding assessment (2–3 weeks)

Identify parallelization opportunities, AI leverage points, and projected ROI.

Parallel coding pilot (4–6 weeks)

Apply parallel coding to a real backlog item and see measurable results fast.

AI-accelerated senior pods

Ongoing delivery using our parallel engineering operating system.

End-to-end modernization

Full re-architecture and delivery at parallel-native speed.

Ready to increase throughput — not headcount?

Let’s map where AtlusAI can unlock immediate velocity in your roadmap.